Generative Adversarial Nets

Ian J. Goodfellow, Jean Pouget-Abadie, Yoshua Bengio, et al.

10 Jun 2014

Deep Generative Models

The ultimate goal of a generative model is to approximate the ground-truth distribution of the data with a neural network and to generate new data by sampling from that learned distribution. Surprisingly, generative models weren't that useful until around 2015, but they have improved enormously since DCGAN (a paper we will discuss later). Before GAN was invented, there were a few successful generative models, such as autoregressive (AR) models and the Variational Autoencoder (VAE). However, these likelihood-based models come with strict requirements, such as well-behaved gradients and a tractable likelihood function, which is a major disadvantage. To sidestep these difficulties, this paper proposes a training framework called GAN, which estimates generative models via an adversarial process in which two models are trained simultaneously.

GAN

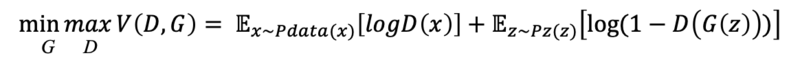

The logic of the proposed adversarial nets framework is quite simple. The paper explains it through an analogy: a team of counterfeiters (the generative model) and the police (the discriminative model). In this analogy, the counterfeiters try their best to produce fake currency, while the police try to tell the fake currency apart from the real currency. Competition between the two players drives both to improve until the generative model G produces currency that is indistinguishable from the genuine currency. Likewise, the generative model G and the discriminative model D are optimized (i.e., trained for a set number of iterations) until the learned distribution of the generator, p_G, becomes exactly the same as the genuine distribution, p_Data. In other words, D and G play the following two-player minimax game with value function V(D, G):

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{Data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

Here z is a noise vector drawn from a prior p_z, G(z) is a generated sample, and D(x) is the probability that D assigns to x being real rather than generated.

The paper gives a theoretical analysis of the global optimum of this minimax game and of the convergence of the training algorithm, showing that adequately optimizing the value function leads to the network's ultimate goal (i.e., p_G = p_Data). Although generative adversarial nets have computational advantages, the generated samples do not clearly outperform those of prior work. Still, this paper is more than meaningful because it demonstrated the viability of the adversarial modeling framework, opening the gate for a wide range of generative models.
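The optimality claim can be checked numerically on a toy case. The sketch below is my own illustration, not code from the paper: it assumes a discrete data space with three outcomes, and the probability tables `p_data`, `p_g_bad`, and `p_g_good` are made-up numbers. It uses the paper's result that, for a fixed G, the best discriminator is D*(x) = p_Data(x) / (p_Data(x) + p_G(x)), and that the value at the global optimum is -log 4.

```python
import math

def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for each outcome x."""
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]

def value_function(d, p_data, p_g):
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))]."""
    real_term = sum(pd * math.log(dx) for pd, dx in zip(p_data, d))
    fake_term = sum(pg * math.log(1.0 - dx) for pg, dx in zip(p_g, d))
    return real_term + fake_term

# Hypothetical distributions over three outcomes (illustrative numbers only).
p_data = [0.5, 0.3, 0.2]
p_g_bad = [0.2, 0.3, 0.5]   # generator that does not match the data
p_g_good = list(p_data)     # generator that matches the data exactly

# Value reached when D is optimal for each generator.
v_bad = value_function(optimal_discriminator(p_data, p_g_bad), p_data, p_g_bad)
v_good = value_function(optimal_discriminator(p_data, p_g_good), p_data, p_g_good)

print(v_bad, v_good, -math.log(4))
```

When the generator matches the data, D* outputs 1/2 everywhere and the value equals -log 4; any mismatched generator gives a larger value, which is why G, the minimizing player, is pushed toward p_G = p_Data.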

DCGAN

DCGAN (Deep Convolutional GAN) is a deep generative architecture built on convolutional neural networks (CNNs). Although GAN brought a clever idea to generative modeling, its outputs were not that impressive. DCGAN improved performance enormously in two respects: (1) the outputs were consistently good across settings, which means training became more stable, and (2) models trained with DCGAN support vector arithmetic in the latent space (similar to word2vec) and therefore behave in a semantically robust way. For example, just as word2vec captures that "king - man + woman" is close to "queen", a DCGAN's latent vectors can be added and subtracted to combine visual concepts. Also, looking at the generated images shows that modifying the vector in the latent space changes one image into another naturally, smoothly, and gradually.
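The two latent-space operations mentioned above, vector arithmetic and gradual interpolation, can be sketched in a few lines. This is only an illustration under my own assumptions: the vectors `z_king`, `z_man`, and `z_woman` are hypothetical 2-dimensional stand-ins (a real DCGAN latent vector is much larger), and no generator is included, so the code only shows what would be fed to a trained generator.

```python
def add(u, v):
    """Element-wise sum of two latent vectors."""
    return [a + b for a, b in zip(u, v)]

def sub(u, v):
    """Element-wise difference of two latent vectors."""
    return [a - b for a, b in zip(u, v)]

def lerp(z0, z1, t):
    """Linear interpolation: t = 0 gives z0, t = 1 gives z1."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]

def interpolate(z0, z1, steps):
    """Evenly spaced latent vectors from z0 to z1 (steps >= 2)."""
    return [lerp(z0, z1, i / (steps - 1)) for i in range(steps)]

# Hypothetical latent vectors, chosen by hand for illustration.
z_king = [1.0, 2.0]
z_man = [1.0, 0.0]
z_woman = [0.0, 1.0]

# "king - man + woman": the result would be passed to a trained generator.
z_result = add(sub(z_king, z_man), z_woman)

# Five intermediate points between two latent vectors; feeding each one to
# the generator is what produces the gradual image-to-image transition.
path = interpolate(z_man, z_woman, 5)
print(z_result)
print(path)
```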

The idea of Generative Adversarial Nets is still evolving, and its outputs are becoming more precise. One GAN variant I liked is Least Squares GAN (LSGAN), which improves performance by penalizing samples that lie far from the decision boundary. There are also other GANs that achieve higher performance in various settings, and some that generate a specific kind of output conditionally. Even though GAN still has some shortcomings, the idea seems to have much more potential than what it has achieved so far.
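To make the LSGAN idea concrete, here is a minimal sketch comparing the generator's per-sample loss under a sigmoid cross-entropy objective and under a least squares objective. The assumptions are mine: `score` stands for the discriminator's raw (pre-sigmoid) output on a generated sample, the least squares target is 1.0 for "real", and the function names are hypothetical rather than taken from any library.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def bce_generator_loss(score):
    """Sigmoid cross-entropy generator loss: -log(sigmoid(score))."""
    return -math.log(sigmoid(score))

def bce_generator_grad(score):
    """Derivative of -log(sigmoid(score)) with respect to score."""
    return sigmoid(score) - 1.0

def ls_generator_loss(score, target=1.0):
    """Least squares generator loss: 0.5 * (score - target)^2."""
    return 0.5 * (score - target) ** 2

def ls_generator_grad(score, target=1.0):
    """Derivative of the least squares loss with respect to score."""
    return score - target

# A generated sample that the discriminator already scores as "real",
# but that sits far from the decision boundary (score = 0).
far_score = 5.0
print(bce_generator_loss(far_score), bce_generator_grad(far_score))
print(ls_generator_loss(far_score), ls_generator_grad(far_score))
```

With the cross-entropy loss, the gradient for such a sample is close to zero, so the generator receives almost no signal. The least squares loss still yields a gradient proportional to the distance from the target, which pulls the sample back toward the boundary.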
