NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis

Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng

3 Aug 2020

NeRF; Neural Radiance Field

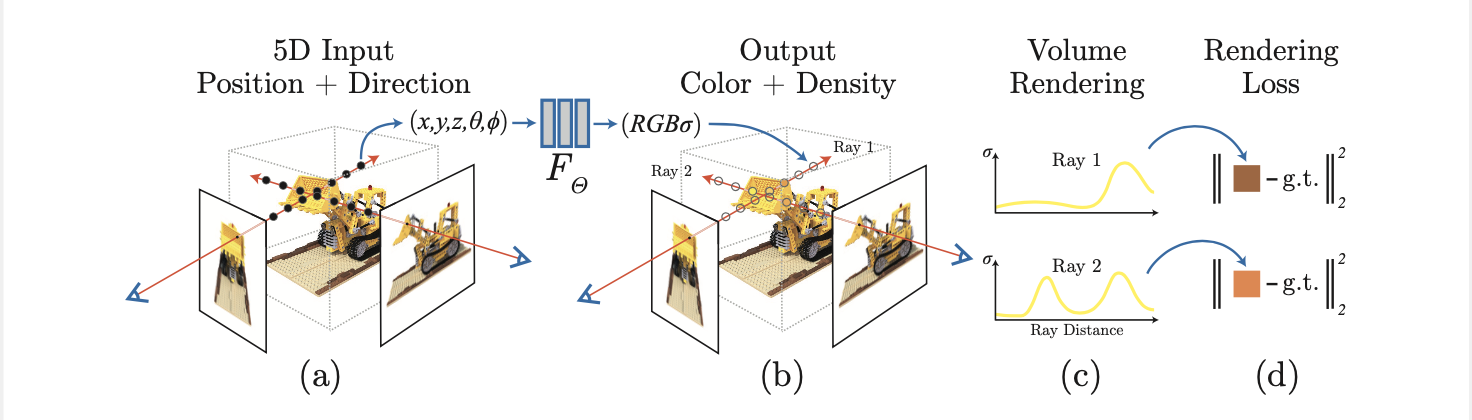

Neural Radiance Field (NeRF) is a novel method for the long-standing problem of view synthesis, i.e. rendering new views of a scene from a set of captured images. Before NeRF, many approaches had been proposed, such as deep convolutional networks that output discretized voxel representations. Unlike these previously dominant models, NeRF optimizes a deep fully-connected neural network (MLP) that represents a static scene as a continuous 5D function of the spatial location (x, y, z) and the viewing direction (θ, φ), which outputs a volume density and a view-dependent color. The network is optimized using only a sparse set of input views of that scene.
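The 5D input lists the viewing direction as two angles (θ, φ), while the paper notes that in practice the direction is expressed as a 3D Cartesian unit vector. Below is a minimal sketch of that conversion, assuming θ is the polar angle measured from the +z axis and φ is the azimuth in the xy-plane. Both this angle convention and the function name `direction_from_angles` are illustrative assumptions, not taken from the paper.

```python
import math


def direction_from_angles(theta: float, phi: float) -> tuple[float, float, float]:
    """Map viewing angles (theta, phi) to a 3D unit vector.

    Assumed convention: theta is the polar angle from +z,
    phi is the azimuth in the xy-plane.
    """
    sin_t = math.sin(theta)
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), math.cos(theta))
```

A full 5D query is then just the position (x, y, z) paired with this unit vector.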

To render the NeRF scene from a particular viewpoint, the paper:

- marched camera rays through the scene to generate a sampled set of 3D points.

- used those points and their corresponding 2D viewing directions as input to the neural network to produce an output set of colors and densities.

- used classical volume rendering techniques to accumulate those colors and densities into a 2D image.
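The first step, marching a camera ray, amounts to evaluating r(t) = o + t·d, where o is the camera center and d is the ray direction. Every point on a given ray is fed to the network together with that same direction d. The sketch below uses evenly spaced depths between hypothetical `near` and `far` bounds for simplicity. The function name and the even spacing are assumptions made for illustration; the paper itself randomizes the depths with stratified sampling.

```python
def ray_points(origin, direction, near, far, n_samples):
    """Return n_samples evenly spaced (t, point) pairs on r(t) = o + t * d."""
    if n_samples < 2:
        raise ValueError("need at least two samples to span [near, far]")
    step = (far - near) / (n_samples - 1)
    samples = []
    for i in range(n_samples):
        t = near + i * step
        # Point along the ray at depth t; all points share the ray's direction.
        point = tuple(o + t * d for o, d in zip(origin, direction))
        samples.append((t, point))
    return samples
```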

Additionally, note that the volume density is predicted solely from the location (x, y, z), while the RGB color depends on both the location and the viewing direction. This restriction encourages the learned scene representation to be multiview consistent.

Neural Radiance Field Scene Representation

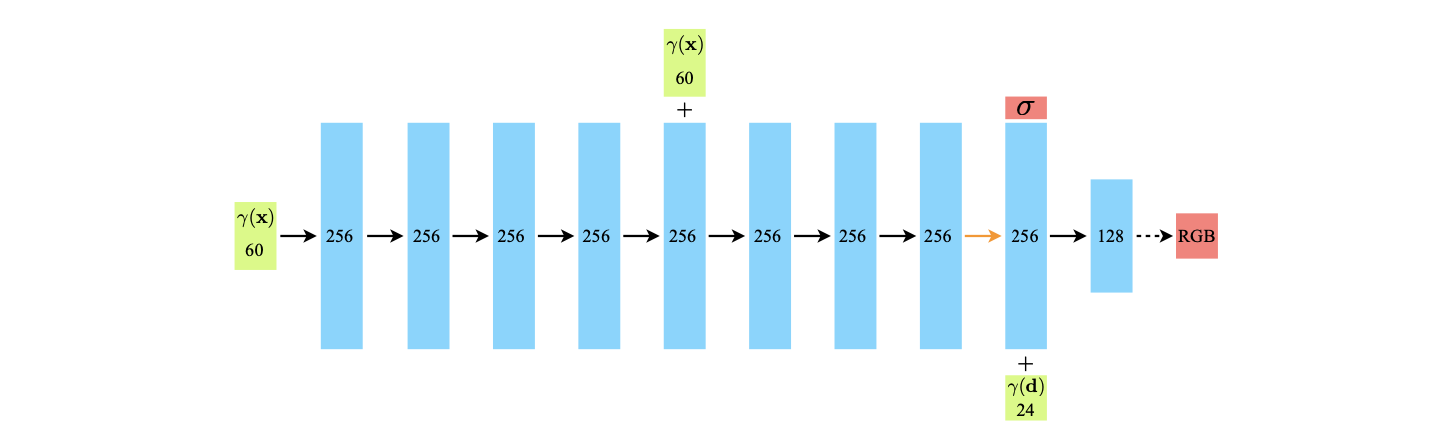

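As the figure above shows, NeRF trains a network that passes the positional encoding of the input location through fully-connected layers to predict the volume density (σ). It then combines the resulting features with the positional encoding of the viewing direction to predict the RGB color. Because the viewing direction enters only after the density has been computed, the volume density (σ) is not affected by the view direction.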
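The split between a position-only density branch and a direction-aware color branch can be illustrated with a toy network. This is a hypothetical pure-Python sketch with random, untrained weights and tiny layers, whereas the paper's MLP uses 8 fully-connected layers of 256 channels. Positional encoding is omitted here, and the class and helper names are made up for illustration. A ReLU keeps σ non-negative and a sigmoid keeps the RGB values in [0, 1].

```python
import math
import random


def init_layer(rng, n_in, n_out):
    """Random (untrained) weights and zero biases for one fully-connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(weights, bias, inputs, relu=True):
    """y = W x + b, optionally followed by a ReLU."""
    out = []
    for row, b in zip(weights, bias):
        s = b + sum(w * x for w, x in zip(row, inputs))
        out.append(max(0.0, s) if relu else s)
    return out


class ToyRadianceField:
    """Hypothetical, untrained toy model with NeRF's branching structure."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.trunk = [init_layer(rng, 3, hidden), init_layer(rng, hidden, hidden)]
        self.sigma_head = init_layer(rng, hidden, 1)
        self.color_layer = init_layer(rng, hidden + 3, hidden // 2)
        self.rgb_head = init_layer(rng, hidden // 2, 3)

    def __call__(self, position, direction):
        # Trunk: sees the position only.
        h = list(position)
        for layer in self.trunk:
            h = dense(*layer, h)
        # Density is read off before the direction is introduced, so it cannot
        # depend on the viewing direction. The ReLU keeps sigma >= 0.
        sigma = dense(*self.sigma_head, h)[0]
        # Color branch: trunk features concatenated with the viewing direction.
        c = dense(*self.color_layer, h + list(direction))
        logits = dense(*self.rgb_head, c, relu=False)
        rgb = [1.0 / (1.0 + math.exp(-z)) for z in logits]  # sigmoid -> (0, 1)
        return sigma, rgb


field = ToyRadianceField()
sigma_a, rgb_a = field((0.1, 0.2, 0.3), (0.0, 0.0, 1.0))
sigma_b, rgb_b = field((0.1, 0.2, 0.3), (1.0, 0.0, 0.0))
```

Querying the same position from two directions returns the same σ, while the colors are generally free to differ.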

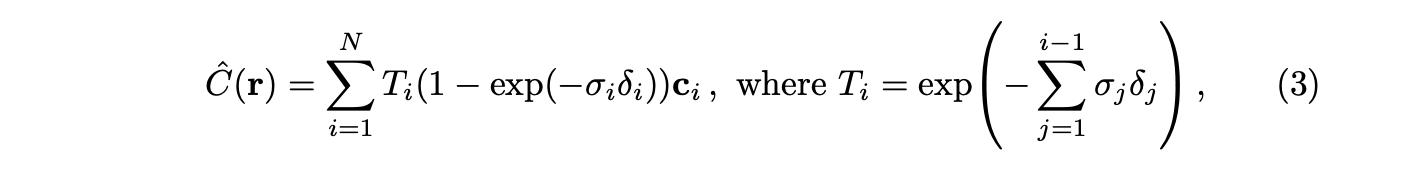

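Rendering the color of a ray from the 5D neural radiance field follows the principles of classical volume rendering. NeRF estimates the rendering integral numerically using quadrature ("the process of determining the area of a plane geometric figure by dividing it into a collection of shapes of known area and then finding the limit of the sum of these areas," Britannica). Specifically, it uses stratified sampling: the depth range between the near and far bounds is split into N evenly spaced bins, and one point is drawn uniformly at random from each bin. Because the sample positions change from one optimization step to the next, the MLP is evaluated at continuous positions over the course of training rather than at a fixed, discrete set of locations. The resulting estimate of the color of a ray r can be expressed as:

$$
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - \exp(-\sigma_i \delta_i)\right) \mathbf{c}_i, \qquad T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right),
$$

where σ_i and c_i are the density and color predicted at the i-th sample t_i, and δ_i = t_{i+1} − t_i is the distance between adjacent samples.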
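Stratified sampling and the volume-rendering quadrature can be sketched directly. In this minimal Python version, `composite` accumulates each sample's color weighted by T_i(1 − exp(−σ_i δ_i)), updating the transmittance T_i as it walks along the ray. The function names are hypothetical, colors are assumed to be RGB triples, and the last interval δ_N is set to a very large value (1e10) so that the final sample can absorb any remaining transmittance. That last choice is a common implementation convention, not something stated in the text above. The per-sample weights are also returned, since hierarchical sampling reuses them.

```python
import math
import random


def stratified_samples(near, far, n_bins, rng):
    """Draw one depth uniformly at random from each of n_bins evenly spaced bins."""
    width = (far - near) / n_bins
    return [near + (i + rng.random()) * width for i in range(n_bins)]


def composite(sigmas, colors, ts, last_delta=1e10):
    """Estimate C(r) = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i.

    Returns the RGB estimate and the per-sample weights T_i * (1 - exp(-sigma_i * delta_i)).
    """
    # delta_i = t_{i+1} - t_i; the final interval is treated as (almost) infinite.
    deltas = [t_next - t for t, t_next in zip(ts, ts[1:])] + [last_delta]
    rgb = [0.0, 0.0, 0.0]
    weights = []
    transmittance = 1.0  # T_1 = 1: nothing has been absorbed before the first sample
    for sigma, color, delta in zip(sigmas, colors, deltas):
        survive = math.exp(-sigma * delta)  # chance of passing through this segment
        w = transmittance * (1.0 - survive)
        weights.append(w)
        for k in range(3):
            rgb[k] += w * color[k]
        transmittance *= survive  # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return rgb, weights
```

A typical call draws depths with `stratified_samples(near, far, N, random.Random(seed))`, queries the network at those depths, and passes the resulting densities and colors to `composite`.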

Optimization; Achieving State-of-the-Art Quality

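To represent complex, high-resolution scenes, the authors add two further improvements: positional encoding and hierarchical sampling. Deep networks are biased towards learning lower-frequency functions, so a network that operates directly on the raw (x, y, z, θ, φ) coordinates performs poorly at representing high-frequency variation in color and geometry. Positional encoding counters this by mapping the inputs to a higher-dimensional space with high-frequency functions before passing them to the network, which lets the MLP better fit data that contains high-frequency variation. Hierarchical sampling increases rendering efficiency by allocating samples proportionally to their expected effect on the final rendering: a "coarse" network is first evaluated at stratified samples, and its output determines where a second set of samples is drawn for a "fine" network.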
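Both improvements can be sketched in a few lines. `positional_encoding` applies γ(p) = (sin(2⁰πp), cos(2⁰πp), …, sin(2^(L−1)πp), cos(2^(L−1)πp)) to each coordinate. The paper uses L = 10 for the position and L = 4 for the viewing direction. `sample_fine` illustrates hierarchical sampling as inverse-transform sampling from the piecewise-constant distribution defined by the coarse network's per-sample weights. The function names, the bin-edge layout, and the small constant added to the weights to avoid division by zero are all assumptions of this sketch.

```python
import bisect
import math
import random


def positional_encoding(p, num_freqs):
    """gamma(p): sin/cos of 2^k * pi * p for k = 0 .. num_freqs - 1, per coordinate."""
    out = []
    for coord in p:
        for k in range(num_freqs):
            angle = (2.0 ** k) * math.pi * coord
            out.extend((math.sin(angle), math.cos(angle)))
    return out


def sample_fine(bin_edges, weights, n_fine, rng, eps=1e-5):
    """Inverse-transform sampling from coarse weights (one weight per bin).

    bin_edges has len(weights) + 1 entries. eps keeps the distribution
    well defined even when every weight is zero (an assumption of this sketch).
    """
    padded = [w + eps for w in weights]
    total = sum(padded)
    cdf = [0.0]
    for w in padded:
        cdf.append(cdf[-1] + w / total)
    samples = []
    for _ in range(n_fine):
        u = rng.random()
        # Bin i satisfies cdf[i] <= u < cdf[i + 1]; the clamp guards against rounding.
        i = min(bisect.bisect_right(cdf, u) - 1, len(padded) - 1)
        frac = min(1.0, (u - cdf[i]) / (cdf[i + 1] - cdf[i]))
        samples.append(bin_edges[i] + frac * (bin_edges[i + 1] - bin_edges[i]))
    return sorted(samples)
```

Bins where the coarse weights are large receive most of the new samples. In the paper, the fine network is then evaluated at the union of the coarse and fine samples.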
